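The R Sword NoSQL Series: Hive

Part 4, R Sword for Hive, is divided into 5 sections.

- Hive introduction

- Hive installation

- RHive installation

- RHive function library

- Basic RHive usage

1. Hive introduction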

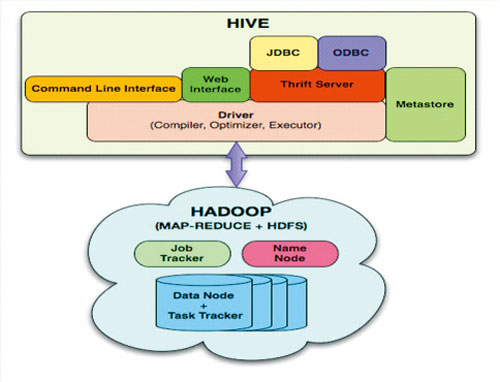

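Hive is a data warehouse infrastructure built on top of Hadoop. It provides a set of tools for data extraction, transformation, and loading (ETL), and a mechanism for storing, querying, and analyzing large-scale data stored in Hadoop. Hive defines a simple SQL-like query language called HQL, which lets users familiar with SQL query the data. The language also lets developers familiar with MapReduce plug in custom mappers and reducers to handle complex analysis that the built-in mappers and reducers cannot.

Hive has no dedicated data format of its own. It works well on top of Thrift, lets you control the field delimiters, and also lets users specify their own data format.

The content above is excerpted from Baidu Baike (http://baike.baidu.com/view/699292.htm).

Differences between Hive and relational databases: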

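2. Hive installation

Hive is a data warehouse product built on Hadoop, so we first need a working Hadoop environment.

For Hadoop installation, see: Hadoop Environment Setup, and Creating a Hadoop Base Virtual Machine

For Hive installation, see: Hive Installation and Usage Guide

Hadoop-1.0.3 download URL: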

http://archive.apache.org/dist/hadoop/core/hadoop-1.0.3/

Hive-0.9.0 download URL:

http://archive.apache.org/dist/hive/hive-0.9.0/

Once Hive is installed, start the hiveserver service:

~ nohup hive --service hiveserver &

Starting Hive Thrift Server

Open the hive shell:

~ hive shell

Logging initialized using configuration in file:/home/conan/hadoop/hive-0.9.0/conf/hive-log4j.properties

Hive history file=/tmp/conan/hive_job_log_conan_201306261459_153868095.txt

# List the Hive tables

hive> show tables;

hive_algo_t_account

o_account

r_t_account

Time taken: 2.12 seconds

# View the data in the o_account table

hive> select * from o_account;

1 [email protected] 2013-04-22 12:21:39

2 [email protected] 2013-04-22 12:21:39

3 [email protected] 2013-04-22 12:21:39

4 [email protected] 2013-04-22 12:21:39

5 [email protected] 2013-04-22 12:21:39

6 [email protected] 2013-04-22 12:21:39

7 [email protected] 2013-04-23 09:21:24

8 [email protected] 2013-04-23 09:21:24

9 [email protected] 2013-04-23 09:21:24

10 [email protected] 2013-04-23 09:21:24

11 [email protected] 2013-04-23 09:21:24

Time taken: 0.469 seconds

3. RHive installation

Set up the Java environment beforehand:

~ java -version

java version "1.6.0_29"

Java(TM) SE Runtime Environment (build 1.6.0_29-b11)

Java HotSpot(TM) 64-Bit Server VM (build 20.4-b02, mixed mode)

Install R: on Ubuntu 12.04, update the package sources first, then install R 2.15.3.

~ sudo sh -c "echo deb http://mirror.bjtu.edu.cn/cran/bin/linux/ubuntu precise/ >>/etc/apt/sources.list"

~ sudo apt-get update

~ sudo apt-get install r-base-core=2.15.3-1precise0precise1

Install the R dependency: rJava

# Configure rJava

~ sudo R CMD javareconf

# Start R

~ sudo R

install.packages("rJava")

Install RHive

install.packages("RHive")

library(RHive)

Loading required package: rJava

Loading required package: Rserve

This is RHive 0.0-7. For overview type ‘?RHive’.

HIVE_HOME=/home/conan/hadoop/hive-0.9.0

call rhive.init() because HIVE_HOME is set.
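The startup message above shows that RHive picks up HIVE_HOME when it is loaded. As a minimal sketch, the check below verifies that such a variable is set and points at a real directory before loading the package. The helper name check_home_dir is hypothetical and not part of RHive; it uses only base R.

```r
# Sketch: verify that an environment variable points at an existing directory.
# check_home_dir is a hypothetical helper, not part of RHive.
check_home_dir <- function(var = "HIVE_HOME") {
  path <- Sys.getenv(var, unset = "")
  if (!nzchar(path)) {
    message(var, " is not set")
    return(FALSE)
  }
  # file.info() returns NA for a missing path, so isTRUE() yields FALSE.
  if (!isTRUE(file.info(path)$isdir)) {
    message(var, " does not point to a directory: ", path)
    return(FALSE)
  }
  TRUE
}

# Only attach RHive when HIVE_HOME looks usable and the package is installed.
if (check_home_dir("HIVE_HOME") && requireNamespace("RHive", quietly = TRUE)) {
  library(RHive)
}
```

If the check fails, export HIVE_HOME in the shell that starts R (in this article it is /home/conan/hadoop/hive-0.9.0) and try again.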

4. RHive function library

rhive.aggregate rhive.connect rhive.hdfs.exists rhive.mapapply

rhive.assign rhive.desc.table rhive.hdfs.get rhive.mrapply

rhive.basic.by rhive.drop.table rhive.hdfs.info rhive.napply

rhive.basic.cut rhive.env rhive.hdfs.ls rhive.query

rhive.basic.cut2 rhive.exist.table rhive.hdfs.mkdirs rhive.reduceapply

rhive.basic.merge rhive.export rhive.hdfs.put rhive.rm

rhive.basic.mode rhive.exportAll rhive.hdfs.rename rhive.sapply

rhive.basic.range rhive.hdfs.cat rhive.hdfs.rm rhive.save

rhive.basic.scale rhive.hdfs.chgrp rhive.hdfs.tail rhive.script.export

rhive.basic.t.test rhive.hdfs.chmod rhive.init rhive.script.unexport

rhive.basic.xtabs rhive.hdfs.chown rhive.list.tables rhive.size.table

rhive.big.query rhive.hdfs.close rhive.load rhive.write.table

rhive.block.sample rhive.hdfs.connect rhive.load.table

rhive.close rhive.hdfs.du rhive.load.table2

Comparison of basic Hive and RHive operations:

# Connect to Hive

Hive: hive shell

RHive: rhive.connect("192.168.1.210")

# List all Hive tables

Hive: show tables;

RHive: rhive.list.tables()

# View the table structure

Hive: desc o_account;

RHive: rhive.desc.table('o_account'), rhive.desc.table('o_account',TRUE)

# Run an HQL query

Hive: select * from o_account;

RHive: rhive.query('select * from o_account')

# List an HDFS directory

Hive: dfs -ls /;

RHive: rhive.hdfs.ls()

# View the contents of an HDFS file

Hive: dfs -cat /user/hive/warehouse/o_account/part-m-00000;

RHive: rhive.hdfs.cat('/user/hive/warehouse/o_account/part-m-00000')

# Disconnect

Hive: quit;

RHive: rhive.close()
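The connect, query, and disconnect calls listed above can be wrapped so that the connection is always closed, even when the query fails. The following is a minimal sketch under stated assumptions: hive_port_open and run_hive_query are hypothetical helper names, hiveserver is assumed to listen on its default Thrift port 10000, and library(RHive) is assumed to be attached already. Only rhive.connect, rhive.query, and rhive.close from the list above are used.

```r
# Sketch only: hive_port_open and run_hive_query are hypothetical helpers.
# Assumption: hiveserver listens on its default Thrift port, 10000.
hive_port_open <- function(host, port = 10000, timeout = 3) {
  con <- tryCatch(
    suppressWarnings(
      socketConnection(host, port, open = "r+", blocking = TRUE, timeout = timeout)
    ),
    error = function(e) NULL
  )
  if (is.null(con)) return(FALSE)
  close(con)
  TRUE
}

# Connect, run one HQL statement, and always disconnect afterwards.
# Assumption: library(RHive) has been attached and initialized.
run_hive_query <- function(host, hql) {
  if (!hive_port_open(host)) stop("cannot reach hiveserver on ", host)
  rhive.connect(host)
  on.exit(rhive.close(), add = TRUE)  # runs even if rhive.query() fails
  rhive.query(hql)
}

# Usage, given a reachable server:
# accounts <- run_hive_query("192.168.1.210", "select * from o_account")
```

Checking the port first turns a hung or cryptic connection failure into a clear error message; on.exit keeps the disconnect next to the connect so it cannot be forgotten.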

5. Basic RHive usage

# Initialize

rhive.init()

# Connect to Hive

rhive.connect("192.168.1.210")

# List all tables

rhive.list.tables()

tab_name

1 hive_algo_t_account

2 o_account

3 r_t_account

# View the table structure

rhive.desc.table('o_account');

col_name data_type comment

1 id int

2 email string

3 create_date string

# Run an HQL query

rhive.query("select * from o_account");

id email create_date

1 1 [email protected] 2013-04-22 12:21:39

2 2 [email protected] 2013-04-22 12:21:39

3 3 [email protected] 2013-04-22 12:21:39

4 4 [email protected] 2013-04-22 12:21:39

5 5 [email protected] 2013-04-22 12:21:39

6 6 [email protected] 2013-04-22 12:21:39

7 7 [email protected] 2013-04-23 09:21:24

8 8 [email protected] 2013-04-23 09:21:24

9 9 [email protected] 2013-04-23 09:21:24

10 10 [email protected] 2013-04-23 09:21:24

11 11 [email protected] 2013-04-23 09:21:24

# Close the connection

rhive.close()

[1] TRUE
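Judging by the printed output, rhive.query appears to hand back an ordinary R data frame, so the result can be processed with base R once it is on the client. The sketch below uses a local stand-in rather than a live query: the id and create_date values are copied from the output above, the email column is left out, and the variable names accounts and per_day are illustrative.

```r
# Stand-in for the data frame printed above; id and create_date values are
# copied from that output, and the email column is left out.
accounts <- data.frame(
  id = 1:11,
  create_date = c(rep("2013-04-22 12:21:39", 6), rep("2013-04-23 09:21:24", 5)),
  stringsAsFactors = FALSE
)

# create_date is a string column in Hive, so parse it on the R side:
# keep the "YYYY-MM-DD" prefix and count rows per day.
accounts$day <- as.Date(substr(accounts$create_date, 1, 10))
per_day <- table(accounts$day)
print(per_day)
```

For a table this small, client-side processing is fine; for large tables it would usually be better to push the aggregation into the HQL statement so that only the summary travels back to R.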

Create a temporary table

rhive.block.sample('o_account', subset="id<5")

[1] "rhive_sblk_1372238856"

rhive.query("select * from rhive_sblk_1372238856");

id email create_date

1 1 [email protected] 2013-04-22 12:21:39

2 2 [email protected] 2013-04-22 12:21:39

3 3 [email protected] 2013-04-22 12:21:39

4 4 [email protected] 2013-04-22 12:21:39

# View the HDFS files

rhive.hdfs.ls('/user/hive/warehouse/rhive_sblk_1372238856/')

permission owner group length modify-time

1 rw-r--r-- conan supergroup 141 2013-06-26 17:28

file

1 /user/hive/warehouse/rhive_sblk_1372238856/000000_0

rhive.hdfs.cat('/user/hive/warehouse/rhive_sblk_1372238856/000000_0')

[email protected] 12:21:39

[email protected] 12:21:39

[email protected] 12:21:39

[email protected] 12:21:39

Split a column's data by range

rhive.basic.cut('o_account','id',breaks='0:100:3')

[1] "rhive_result_20130626173626"

attr(,"result:size")

[1] 443

rhive.query("select * from rhive_result_20130626173626");

email create_date id

1 [email protected] 2013-04-22 12:21:39 (0,3]

2 [email protected] 2013-04-22 12:21:39 (0,3]

3 [email protected] 2013-04-22 12:21:39 (0,3]

4 [email protected] 2013-04-22 12:21:39 (3,6]

5 [email protected] 2013-04-22 12:21:39 (3,6]

6 [email protected] 2013-04-22 12:21:39 (3,6]

7 [email protected] 2013-04-23 09:21:24 (6,9]

8 [email protected] 2013-04-23 09:21:24 (6,9]

9 [email protected] 2013-04-23 09:21:24 (6,9]

10 [email protected] 2013-04-23 09:21:24 (9,12]

11 [email protected] 2013-04-23 09:21:24 (9,12]
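The intervals in this result match what base R's cut() produces for break points 0, 3, 6, and so on. As a rough local illustration, the sketch below reads the breaks string as "from:to:by"; that reading is an assumption inferred from the output above, not taken from the RHive documentation, and parse_breaks is a hypothetical helper.

```r
# parse_breaks is a hypothetical helper: it reads "from:to:by" as seq(from, to, by).
parse_breaks <- function(spec) {
  parts <- as.numeric(strsplit(spec, ":", fixed = TRUE)[[1]])
  stopifnot(length(parts) == 3)
  seq(parts[1], parts[2], by = parts[3])
}

# The same ids as in o_account, binned locally with base R.
ids <- 1:11
bins <- cut(ids, breaks = parse_breaks("0:100:3"))
print(data.frame(id = ids, bin = as.character(bins)))
```

Each interval is open on the left and closed on the right, which is why id 3 falls into (0,3] and id 4 into (3,6], as in the Hive result.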

Hive operations on HDFS

# List the HDFS directory

rhive.hdfs.ls()

permission owner group length modify-time file

1 rwxr-xr-x conan supergroup 0 2013-04-24 01:52 /HBase

2 rwxr-xr-x conan supergroup 0 2013-06-23 10:59 /home

3 rwxr-xr-x conan supergroup 0 2013-06-26 11:18 /rhive

4 rwxr-xr-x conan supergroup 0 2013-06-23 13:27 /tmp

5 rwxr-xr-x conan supergroup 0 2013-04-24 19:28 /user

# View the contents of an HDFS file

rhive.hdfs.cat('/user/hive/warehouse/o_account/part-m-00000')

[email protected] 12:21:39

[email protected] 12:21:39

[email protected] 12:21:39