R语言与函数预计进修条记(核要领与局部多项式)

非参数要领

用于函数预计的非参数要领大抵上有三种:核要领、局部多项式要领、样条要领。

非参的函数预计的利益在于稳健,对模子没有什么特定的假设,只是认为函数平滑,制止了模子选择带来的风险;可是,表达式巨大,难以表明,计较劲大长短参的一个很大的短处。所以说利用非参有风险,选择需审慎。

非参的想法很简朴:函数在视察到的点取视察值的概率较大,用x四周的值通过加权平均的步伐预计函数( f(x) )的值。

核要领

当加权的权重是某一函数的核(关于“核”的说法可拜见之前的博文《R语言与非参数统计(核密度预计)》),这种要领就是核要领,常见的有Nadaraya-Watson核预计与Gasser-Muller核预计要领,也就是许多课本里谈到的NW核预计与GM核预计,这里我们照旧不谈核的选择,将一切的核预计都默认用Gauss核处理惩罚。

NW核预计形式为:[ hat f_h(x)=frac{sum_{i=1}^n K_h(x_i-x)y_i}{sum_{i=1}^n K_h(x_i-x)} ]GM核预计形式为:[ hat f_h(x)=sum_{i=1}^n y_i int_{s_{i-1}}^{s_i} K_h(u-x)du ]式中( s_i=(x_i+x_{i+1})/2,x_0=-infty,x_{n+1}=infty )。

x <- seq(-1, 1, length = 20)

y <- 5 * x * cos(5 * pi * x)

h <- 0.088

fx.hat <- function(z, h) {

dnorm((z - x)/h)/h

}

KSMOOTH <- function(h, y, x) {

n <- length(y)

s.hat <- rep(0, n)

for (i in 1:n) {

a <- fx.hat(x[i], h)

s.hat[i] <- sum(y * a/sum(a))

}

return(s.hat)

}

ksmooth.val <- KSMOOTH(h, y, x)

plot(x, y, xlab = "Predictor", ylab = "Response")

f <- function(x) 5 * x * cos(5 * pi * x)

curve(f, -1, 1, ylim = c(-15.5, 15.5), lty = 2, add = T)

lines(x, ksmooth.val, type = "l")

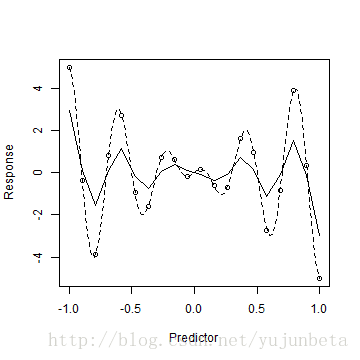

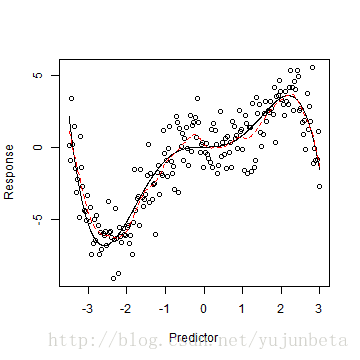

可以看出,核要领根基预计出了函数的形状,可是结果不太好,这个是由于样本点过少导致的,我们可以将样本容量扩大一倍,看看结果:

x <- seq(-1, 1, length = 40)

y <- 5 * x * cos(5 * pi * x)

h <- 0.055

fx.hat <- function(z, h) {

dnorm((z - x)/h)/h

}

NWSMOOTH <- function(h, y, x) {

n <- length(y)

s.hat <- rep(0, n)

for (i in 1:n) {

a <- fx.hat(x[i], h)

s.hat[i] <- sum(y * a/sum(a))

}

return(s.hat)

}

NWsmooth.val <- NWSMOOTH(h, y, x)

plot(x, y, xlab = "Predictor", ylab = "Response", col = 1)

f <- function(x) 5 * x * cos(5 * pi * x)

curve(f, -1, 1, ylim = c(-15.5, 15.5), lty = 1, add = T, col = 1)

lines(x, NWsmooth.val, lty = 2, col = 2)

A <- data.frame(x = seq(-1, 1, length = 1000))

model.linear <- lm(y ~ poly(x, 9))

lines(seq(-1, 1, length = 1000), predict(model.linear, A), lty = 3, col = 3)

letters <- c("NW method", "orignal model", "9 order poly-reg")

legend("bottomright", legend = letters, lty = c(2, 1, 3), col = c(2, 1, 3),

cex = 0.5)

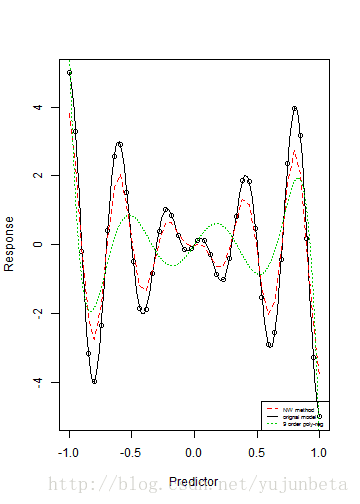

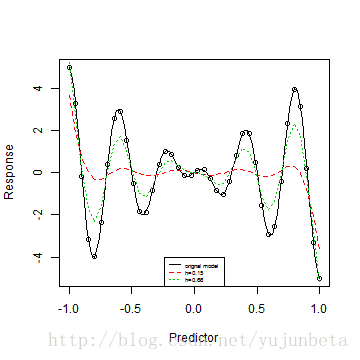

可以看到预计结果照旧很好的,至少比基函数的步伐要好一些。那么我们来看看GM核要领:

x <- seq(-1, 1, length = 40)

y <- 5 * x * cos(5 * pi * x)

h <- 0.055

GMSMOOTH <- function(y, x, h) {

n <- length(y)

s <- c(-Inf, 0.5 * (x[-n] + x[-1]), Inf)

s.hat <- rep(0, n)

for (i in 1:n) {

fx.hat <- function(z, h, x) {

dnorm((x - z)/h)/h

}

a <- y[i] * integrate(fx.hat, s[i], s[i + 1], h = h, x = x[i])$value

s.hat[i] <- sum(a)

}

return(s.hat)

}

GMsmooth.val <- GMSMOOTH(y, x, h)

plot(x, y, xlab = "Predictor", ylab = "Response", col = 1)

f <- function(x) 5 * x * cos(5 * pi * x)

curve(f, -1, 1, ylim = c(-15.5, 15.5), lty = 1, add = T, col = 1)

lines(x, GMsmooth.val, lty = 2, col = 2)

A <- data.frame(x = seq(-1, 1, length = 1000))

model.linear <- lm(y ~ poly(x, 9))

lines(seq(-1, 1, length = 1000), predict(model.linear, A), lty = 3, col = 3)

letters <- c("GM method", "orignal model", "9 order poly-reg")

legend("bottomright", legend = letters, lty = c(2, 1, 3), col = c(2, 1, 3),

cex = 0.5)

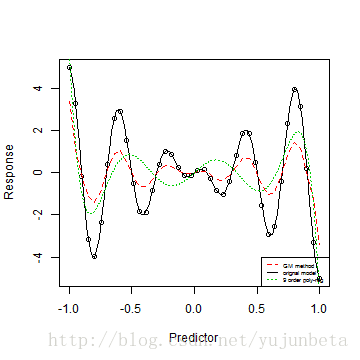

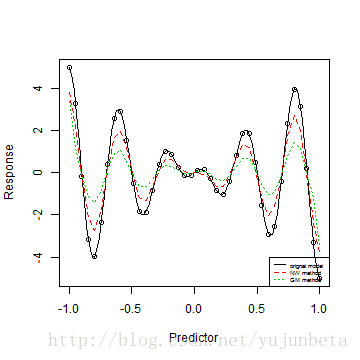

我们来看看两个核预计步伐的差别:

x <- seq(-1, 1, length = 40)

y <- 5 * x * cos(5 * pi * x)

h <- 0.055

fx.hat <- function(z, h) {

dnorm((z - x)/h)/h

}

NWSMOOTH <- function(h, y, x) {

n <- length(y)

s.hat <- rep(0, n)

for (i in 1:n) {

a <- fx.hat(x[i], h)

s.hat[i] <- sum(y * a/sum(a))

}

return(s.hat)

}

NWsmooth.val <- NWSMOOTH(h, y, x)

GMSMOOTH <- function(y, x, h) {

n <- length(y)

s <- c(-Inf, 0.5 * (x[-n] + x[-1]), Inf)

s.hat <- rep(0, n)

for (i in 1:n) {

fx.hat <- function(z, h, x) {

dnorm((x - z)/h)/h

}

a <- y[i] * integrate(fx.hat, s[i], s[i + 1], h = h, x = x[i])$value

s.hat[i] <- sum(a)

}

return(s.hat)

}

GMsmooth.val <- GMSMOOTH(y, x, h)

plot(x, y, xlab = "Predictor", ylab = "Response", col = 1)

f <- function(x) 5 * x * cos(5 * pi * x)

curve(f, -1, 1, ylim = c(-15.5, 15.5), lty = 1, add = T, col = 1)

lines(x, NWsmooth.val, lty = 2, col = 2)

lines(x, GMsmooth.val, lty = 3, col = 3)

letters <- c("orignal model", "NW method", "GM method")

legend("bottomright", legend = letters, lty = 1:3, col = 1:3, cex = 0.5)

#p#分页标题#e#

从图中可以看到NW预计的方差好像小些,事实也确实如此,GM预计的渐进方差约为NW预计的1.5倍。可是GM预计办理了一些计较的困难。

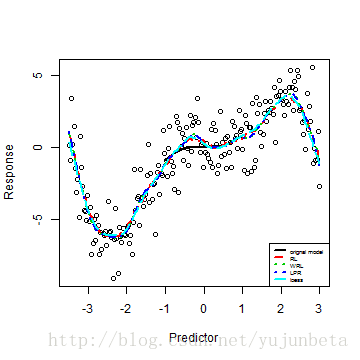

我们最后照旧来展示差异窗宽的选择对预计的影响(这里以NW预计为例):

x <- seq(-1, 1, length = 40)

y <- 5 * x * cos(5 * pi * x)

fx.hat <- function(z, h) {

dnorm((z - x)/h)/h

}

NWSMOOTH <- function(h, y, x) {

n <- length(y)

s.hat <- rep(0, n)

for (i in 1:n) {

a <- fx.hat(x[i], h)

s.hat[i] <- sum(y * a/sum(a))

}

return(s.hat)

}

h <- 0.025

NWsmooth.val0 <- NWSMOOTH(h, y, x)

h <- 0.05

NWsmooth.val1 <- NWSMOOTH(h, y, x)

h <- 0.1

NWsmooth.val2 <- NWSMOOTH(h, y, x)

h <- 0.2

NWsmooth.val3 <- NWSMOOTH(h, y, x)

h <- 0.3

NWsmooth.val4 <- NWSMOOTH(h, y, x)

plot(x, y, xlab = "Predictor", ylab = "Response", col = 1)

f <- function(x) 5 * x * cos(5 * pi * x)

curve(f, -1, 1, ylim = c(-15.5, 15.5), lty = 1, add = T, col = 1)

lines(x, NWsmooth.val0, lty = 2, col = 2)

lines(x, NWsmooth.val1, lty = 3, col = 3)

lines(x, NWsmooth.val2, lty = 4, col = 4)

lines(x, NWsmooth.val3, lty = 5, col = 5)

lines(x, NWsmooth.val4, lty = 6, col = 6)

letters <- c("orignal model", "h=0.025", "h=0.05", "h=0.1", "h=0.2", "h=0.3")

legend("bottom", legend = letters, lty = 1:6, col = 1:6, cex = 0.5)

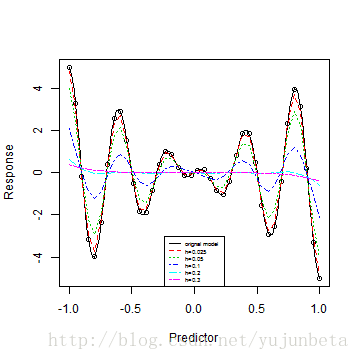

可以看到窗宽越大,预计越平滑,误差越大,窗宽越小,预计越不仅滑,但拟合优度有提高,却也容易过拟合。

窗宽怎么选,一个可行的步伐就是CV,通俗的讲就是取一个视察做测试集,剩下的做练习集,看NMSE。R代码如下:

x <- seq(-1, 1, length = 40)

y <- 5 * x * cos(5 * pi * x)

# h<-0.055

NWSMOOTH <- function(h, y, x, z) {

n <- length(y)

s.hat <- rep(0, n)

s.hat1 <- rep(0, n)

for (i in 1:n) {

s.hat[i] <- dnorm((x[i] - z)/h)/h * y[i]

s.hat1[i] <- dnorm((x[i] - z)/h)/h

}

z.hat <- sum(s.hat)/sum(s.hat1)

return(z.hat)

}

CVRSS <- function(h, y, x) {

cv <- NULL

for (i in seq(x)) {

cv[i] <- (y[i] - NWSMOOTH(h, y[-i], x[-i], x[i]))^2

}

mean(cv)

}

h <- seq(0.01, 0.2, by = 0.005)

cvrss.val <- rep(0, length(h))

for (i in seq(h)) {

cvrss.val[i] <- CVRSS(h[i], y, x)

}

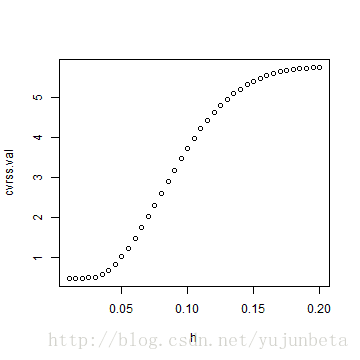

plot(h, cvrss.val, type = "b")

从图中我们可以见到CV值在0.01到0.05的变革都不大,这时,我们应该选择较大的h,使得函数较为平滑,可是0.05后,cv变革较大,说明我们选择窗宽也不能过大,不然也会出短处的。那么是不是h越小越好呢?固然上面一个例子给了我们这样一个错觉,我们下面这个例子就可以冲破它,数据来自《computational statistics》一书的essay data一例。

easy <- read.table("D:/R/data/easysmooth.dat", header = T)

x <- easy$X

y <- easy$Y

NWSMOOTH <- function(h, y, x, z) {

n <- length(y)

s.hat <- rep(0, n)

s.hat1 <- rep(0, n)

for (i in 1:n) {

s.hat[i] <- dnorm((x[i] - z)/h)/h * y[i]

s.hat1[i] <- dnorm((x[i] - z)/h)/h

}

z.hat <- sum(s.hat)/sum(s.hat1)

return(z.hat)

}

CVRSS <- function(h, y, x) {

cv <- NULL

for (i in seq(x)) {

cv[i] <- (y[i] - NWSMOOTH(h, y[-i], x[-i], x[i]))^2

}

mean(cv)

}

h <- seq(0.01, 0.3, by = 0.02)

cvrss.val <- rep(0, length(h))

for (i in seq(h)) {

cvrss.val[i] <- CVRSS(h[i], y, x)

}

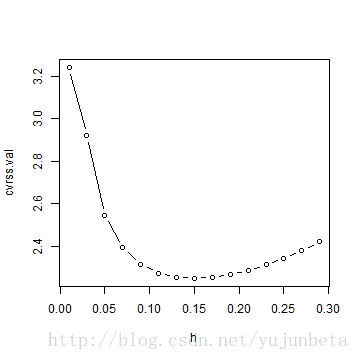

plot(h, cvrss.val, type = "b")

#p#分页标题#e#

从上图就可以看到,较佳窗宽约为0.15,而不是0.01.

和树回归雷同,我们的核要领就是提供了一个常数来渐进这个函数,我们这里仍然可以思量模子树的想法,用一阶可能高阶多项式来作加权预计,这就有结局部多项式预计。

局部多项式

预计的想法是认为未知函数( f(x) )在近邻邻域内可由某一多项式近似(这是Taylor公式的功效),在( x_0 )的邻域内最小化:[ arg underset{beta_0,cdots,beta_p}{min}sum_{i=1}^n[y_i-sum_{j=0}^p beta_j(x_i-x_0)^j]^2 K_h(x_i-x_0) ]详细做法为:

(1)对付每个xi,以该点为中心,计较出对应点处的权重( K_h(x_i-x) );

(2)回收加权最小二乘法(WLS)预计其参数,并用获得的模子预计该结点对应的x值对应y值,作为y|xi的预计值(只要这一个点的预计值);

(3)预计下一个点xj;

(4)将每个y|xi的预计值毗连起来。

我们这里以《computational statistics》一书的essay data为例来说明局部多项式预计

easy <- read.table("D:/R/data/easysmooth.dat", header = T)

x <- easy$X

y <- easy$Y

h <- 0.16

## FUNCTIONS USES HAT MATRIX

LPRSMOOTH <- function(y, x, h) {

n <- length(y)

s.hat <- rep(0, n)

for (i in 1:n) {

weight <- dnorm((x - x[i])/h)

mod <- lm(y ~ x, weights = weight)

s.hat[i] <- as.numeric(predict(mod, data.frame(x = x[i])))

}

return(s.hat)

}

lprsmooth.val <- LPRSMOOTH(y, x, h)

s <- function(x) {

(x^3) * sin((x + 3.4)/2)

}

x.plot <- seq(min(x), max(x), length.out = 1000)

y.plot <- s(x.plot)

plot(x, y, xlab = "Predictor", ylab = "Response")

lines(x.plot, y.plot, lty = 1, col = 1)

lines(x, lprsmooth.val, lty = 2, col = 2)

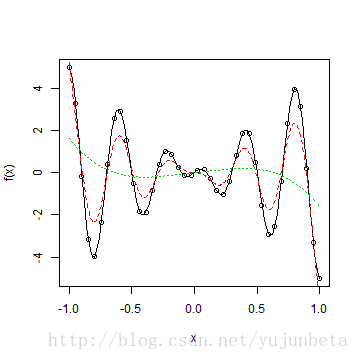

我们回到最开始我们提到的三角函数的例子,我们可以看到:

x <- seq(-1, 1, length = 40)

y <- 5 * x * cos(5 * pi * x)

## FUNCTIONS

LPRSMOOTH <- function(y, x, h) {

n <- length(y)

s.hat <- rep(0, n)

for (i in 1:n) {

weight <- dnorm((x - x[i])/h)

mod <- lm(y ~ x, weights = weight)

s.hat[i] <- as.numeric(predict(mod, data.frame(x = x[i])))

}

return(s.hat)

}

h <- 0.15

lprsmooth.val1 <- LPRSMOOTH(y, x, h)

h <- 0.066

lprsmooth.val2 <- LPRSMOOTH(y, x, h)

plot(x, y, xlab = "Predictor", ylab = "Response")

f <- function(x) 5 * x * cos(5 * pi * x)

curve(f, -1, 1, ylim = c(-15.5, 15.5), lty = 1, add = T, col = 1)

lines(x, lprsmooth.val1, lty = 2, col = 2)

lines(x, lprsmooth.val2, lty = 3, col = 3)

letters <- c("orignal model", "h=0.15", "h=0.66")

legend("bottom", legend = letters, lty = 1:3, col = 1:3, cex = 0.5)

R中提供了许多的函数包来做非参数回归,常用的有:KernSmooth包的函数locpoly(),locpol的locpol(),locCteSmootherC()以及locfit的locfit().详细的参数配置较为简朴,这里就不多说了。我们以开篇的三角函数模子的例子为例来看看如何利用它们:

library(KernSmooth) #函数locpoly()

library(locpol) #locpol(); locCteSmootherC()

library(locfit) #locfit()

x <- seq(-1, 1, length = 40)

y <- 5 * x * cos(5 * pi * x)

f <- function(x) 5 * x * cos(5 * pi * x)

curve(f, -1, 1)

points(x, y)

fit <- locpoly(x, y, bandwidth = 0.066)

lines(fit, lty = 2, col = 2)

d <- data.frame(x = x, y = y)

r <- locfit(y ~ x, d) #一般来说,locfit函数自动选的窗宽偏大,函数较平滑

lines(r, lty = 3, col = 3)

xeval <- seq(-1, 1, length = 10)

cuest <- locCuadSmootherC(d$x, d$y, xeval, 0.066, gaussK)

cuest

## x beta0 beta1 beta2 den

## 1 -1.0000 5.0858 -35.152 -222.58 0.07571

## 2 -0.7778 -3.3233 11.966 514.42 4.22454

## 3 -0.5556 1.9804 -16.219 -279.47 4.26349

## 4 -0.3333 -0.8416 13.137 83.37 4.26349

## 5 -0.1111 0.1924 -4.983 27.90 4.26349

## 6 0.1111 -0.1924 -4.983 -27.90 4.26349

## 7 0.3333 0.8416 13.137 -83.37 4.26349

## 8 0.5556 -1.9804 -16.219 279.47 4.26349

## 9 0.7778 3.3233 11.966 -514.42 4.22454

## 10 1.0000 -5.0858 -35.152 222.58 0.07571

#p#分页标题#e#

关于局部多项式预计的想法尚有许多,好比我们只思量近邻的数据作最小二乘预计,再好比我们可以修改权重,使得我们的窗宽与近邻点的间隔有关,再好比,我们可以思量不回收最小二乘做优化,而是对最小二乘加上一点点的处罚……我们将第一个想法称之为running line,第二个想法称之为loess,第三个想法依据你的处罚方法差异有差异的说法。我们将running line的R措施给出如下:

RLSMOOTH <- function(k, y, x) {

n <- length(y)

s.hat <- rep(0, n)

b <- (k - 1)/2

if (k > 1) {

for (i in 1:(b + 1)) {

xi <- x[1:(b + i)]

xi <- cbind(rep(1, length(xi)), xi)

hi <- xi %*% solve(t(xi) %*% xi) %*% t(xi)

s.hat[i] <- y[1:(b + i)] %*% hi[i, ]

xi <- x[(n - b - i + 1):n]

xi <- cbind(rep(1, length(xi)), xi)

hi <- xi %*% solve(t(xi) %*% xi) %*% t(xi)

s.hat[n - i + 1] <- y[(n - b - i + 1):n] %*% hi[nrow(hi) - i + 1,

]

}

for (i in (b + 2):(n - b - 1)) {

xi <- x[(i - b):(i + b)]

xi <- cbind(rep(1, length(xi)), xi)

hi <- xi %*% solve(t(xi) %*% xi) %*% t(xi)

s.hat[i] <- y[(i - b):(i + b)] %*% hi[b + 1, ]

}

}

if (k == 1) {

s.hat <- y

}

return(s.hat)

}

我们也一样可以对running line做局部多项式回归,代码如下:

WRLSMOOTH <- function(k, y, x, h) {

n <- length(y)

s.hat <- rep(0, n)

b <- (k - 1)/2

if (k > 1) {

for (i in 1:(b + 1)) {

xi <- x[1:(b + i)]

xi <- cbind(rep(1, length(xi)), xi)

hi <- xi %*% solve(t(xi) %*% xi) %*% t(xi)

s.hat[i] <- y[1:(b + i)] %*% hi[i, ]

xi <- x[(n - b - i + 1):n]

xi <- cbind(rep(1, length(xi)), xi)

hi <- xi %*% solve(t(xi) %*% xi) %*% t(xi)

s.hat[n - i + 1] <- y[(n - b - i + 1):n] %*% hi[nrow(hi) - i + 1,

]

}

for (i in (b + 2):(n - b - 1)) {

xi <- x[(i - b):(i + b)]

weight <- dnorm((xi - x[i])/h)

mod <- lm(y[(i - b):(i + b)] ~ xi, weights = weight)

s.hat[i] <- as.numeric(predict(mod, data.frame(xi = x[i])))

}

}

if (k == 1) {

s.hat <- y

}

return(s.hat)

}

R中也提供了函数lowess()来做loess回归。我们这里不妨以essay data为例,看看这三个预计有多大的不同: