浅谈中文文本自动纠错在影视剧搜索中应用与Java实现

副标题#e#

1.配景:

这周由于项目需要对搜索框中输入的错误影片名举办校正处理惩罚,以晋升搜索掷中率和用户体验,研究了一下中文文本自动纠错(专业点讲是校对,proofread),并劈头实现了该成果,特此记录。

2.简介:

中文输入错误的校对与矫正是指在输入不常见可能错误文字时系统提示文字有误,最简朴的例子就是在word里打字时会有赤色下划线提示。实现该成果今朝主要有两大思路:

(1) 基于大量字典的分词法:主要是将待阐明的汉字串与一个很大的“呆板辞书”中的词条举办匹配,若在辞书中找到则匹配乐成;该要领易于实现,较量合用于输入的汉字串

属于某个或某几个规模的名词或名称;

(2) 基于统计信息的分词法:常用的是N-Gram语言模子,其实就是N-1阶Markov(马尔科夫)模子;在此简介一下该模子:

上式是Byes公式,表白字符串X1X2……Xm呈现的概率是每个字单独呈现的条件概率之积,为了简化计较假设字Xi的呈现仅与前面紧挨着的N-1个字符有关,则上面的公式变为:

这就是N-1阶Markov(马尔科夫)模子,计较出概率后与一个阈值比拟,若小于该阈值则提示该字符串拼写有误。

3.实现:

由于本人项目针对的输入汉字串根基上是影视剧名称以及综艺动漫节目标名字,语料库的范畴相对不变些,所以这里回收2-Gram即二元语言模子与字典分词相团结的要领;

先说下思路:

对语料库举办分词处理惩罚 —>计较二元词条呈现概率(在语料库的样本下,用词条呈现的频率取代) —>看待阐明的汉字串分词并找出最大持续字符串和第二大持续字符串 —>

操作最大和第二大持续字符串与语料库的影片名称匹配—>部门匹配则现实拼写有误并返回矫正的字符串(所以字典很重要)

备注:分词这里用ICTCLAS Java API

上代码:

建设类ChineseWordProofread

3.1 初始化分词包并对影片语料库举办分词处理惩罚

public ICTCLAS2011 initWordSegmentation(){

ICTCLAS2011 wordSeg = new ICTCLAS2011();

try{

String argu = "F:\\Java\\workspace\\wordProofread"; //set your project path

System.out.println("ICTCLAS_Init");

if (ICTCLAS2011.ICTCLAS_Init(argu.getBytes("GB2312"),0) == false)

{

System.out.println("Init Fail!");

//return null;

}

/*

* 配置词性标注集

ID 代表词性集

1 计较所一级标注集

0 计较所二级标注集

2 北大二级标注集

3 北大一级标注集

*/

wordSeg.ICTCLAS_SetPOSmap(2);

}catch (Exception ex){

System.out.println("words segmentation initialization failed");

System.exit(-1);

}

return wordSeg;

}

public boolean wordSegmentate(String argu1,String argu2){

boolean ictclasFileProcess = false;

try{

//文件分词

ictclasFileProcess = wordSeg.ICTCLAS_FileProcess(argu1.getBytes("GB2312"), argu2.getBytes("GB2312"), 0);

//ICTCLAS2011.ICTCLAS_Exit();

}catch (Exception ex){

System.out.println("file process segmentation failed");

System.exit(-1);

}

return ictclasFileProcess;

}

3.2 计较词条(tokens)呈现的频率

public Map<String,Integer> calculateTokenCount(String afterWordSegFile){

Map<String,Integer> wordCountMap = new HashMap<String,Integer>();

File movieInfoFile = new File(afterWordSegFile);

BufferedReader movieBR = null;

try {

movieBR = new BufferedReader(new FileReader(movieInfoFile));

} catch (FileNotFoundException e) {

System.out.println("movie_result.txt file not found");

e.printStackTrace();

}

String wordsline = null;

try {

while ((wordsline=movieBR.readLine()) != null){

String[] words = wordsline.trim().split(" ");

for (int i=0;i<words.length;i++){

int wordCount = wordCountMap.get(words[i])==null ? 0:wordCountMap.get(words[i]);

wordCountMap.put(words[i], wordCount+1);

totalTokensCount += 1;

if (words.length > 1 && i < words.length-1){

StringBuffer wordStrBuf = new StringBuffer();

wordStrBuf.append(words[i]).append(words[i+1]);

int wordStrCount = wordCountMap.get(wordStrBuf.toString())==null ? 0:wordCountMap.get(wordStrBuf.toString());

wordCountMap.put(wordStrBuf.toString(), wordStrCount+1);

totalTokensCount += 1;

}

}

}

} catch (IOException e) {

System.out.println("read movie_result.txt file failed");

e.printStackTrace();

}

return wordCountMap;

}

#p#分页标题#e#

3.3 找出待阐明字符串中的正确tokens

public Map<String,Integer> calculateTokenCount(String afterWordSegFile){

Map<String,Integer> wordCountMap = new HashMap<String,Integer>();

File movieInfoFile = new File(afterWordSegFile);

BufferedReader movieBR = null;

try {

movieBR = new BufferedReader(new FileReader(movieInfoFile));

} catch (FileNotFoundException e) {

System.out.println("movie_result.txt file not found");

e.printStackTrace();

}

String wordsline = null;

try {

while ((wordsline=movieBR.readLine()) != null){

String[] words = wordsline.trim().split(" ");

for (int i=0;i<words.length;i++){

int wordCount = wordCountMap.get(words[i])==null ? 0:wordCountMap.get(words[i]);

wordCountMap.put(words[i], wordCount+1);

totalTokensCount += 1;

if (words.length > 1 && i < words.length-1){

StringBuffer wordStrBuf = new StringBuffer();

wordStrBuf.append(words[i]).append(words[i+1]);

int wordStrCount = wordCountMap.get(wordStrBuf.toString())==null ? 0:wordCountMap.get(wordStrBuf.toString());

wordCountMap.put(wordStrBuf.toString(), wordStrCount+1);

totalTokensCount += 1;

}

}

}

} catch (IOException e) {

System.out.println("read movie_result.txt file failed");

e.printStackTrace();

}

return wordCountMap;

}

3.4 获得最大持续和第二大持续字符串(也大概为单个字符)

#p#副标题#e#

public String[] getMaxAndSecondMaxSequnce(String[] sInputResult){

List<String> correctTokens = getCorrectTokens(sInputResult);

//TODO

System.out.println(correctTokens);

String[] maxAndSecondMaxSeq = new String[2];

if (correctTokens.size() == 0) return null;

else if (correctTokens.size() == 1){

maxAndSecondMaxSeq[0]=correctTokens.get(0);

maxAndSecondMaxSeq[1]=correctTokens.get(0);

return maxAndSecondMaxSeq;

}

String maxSequence = correctTokens.get(0);

String maxSequence2 = correctTokens.get(correctTokens.size()-1);

String littleword = "";

for (int i=1;i<correctTokens.size();i++){

//System.out.println(correctTokens);

if (correctTokens.get(i).length() > maxSequence.length()){

maxSequence = correctTokens.get(i);

} else if (correctTokens.get(i).length() == maxSequence.length()){

//select the word with greater probability for single-word

if (correctTokens.get(i).length()==1){

if (probBetweenTowTokens(correctTokens.get(i)) > probBetweenTowTokens(maxSequence)) {

maxSequence2 = correctTokens.get(i);

}

}

//select words with smaller probability for multi-word, because the smaller has more self information

else if (correctTokens.get(i).length()>1){

if (probBetweenTowTokens(correctTokens.get(i)) <= probBetweenTowTokens(maxSequence)) {

maxSequence2 = correctTokens.get(i);

}

}

} else if (correctTokens.get(i).length() > maxSequence2.length()){

maxSequence2 = correctTokens.get(i);

} else if (correctTokens.get(i).length() == maxSequence2.length()){

if (probBetweenTowTokens(correctTokens.get(i)) > probBetweenTowTokens(maxSequence2)){

maxSequence2 = correctTokens.get(i);

}

}

}

//TODO

System.out.println(maxSequence+" : "+maxSequence2);

//delete the sub-word from a string

if (maxSequence2.length() == maxSequence.length()){

int maxseqvaluableTokens = maxSequence.length();

int maxseq2valuableTokens = maxSequence2.length();

float min_truncate_prob_a = 0 ;

float min_truncate_prob_b = 0;

String aword = "";

String bword = "";

for (int i=0;i<correctTokens.size();i++){

float tokenprob = probBetweenTowTokens(correctTokens.get(i));

if ((!maxSequence.equals(correctTokens.get(i))) && maxSequence.contains(correctTokens.get(i))){

if ( tokenprob >= min_truncate_prob_a){

min_truncate_prob_a = tokenprob ;

aword = correctTokens.get(i);

}

}

else if ((!maxSequence2.equals(correctTokens.get(i))) && maxSequence2.contains(correctTokens.get(i))){

if (tokenprob >= min_truncate_prob_b){

min_truncate_prob_b = tokenprob;

bword = correctTokens.get(i);

}

}

}

//TODO

System.out.println(aword+" VS "+bword);

System.out.println(min_truncate_prob_a+" VS "+min_truncate_prob_b);

if (aword.length()>0 && min_truncate_prob_a < min_truncate_prob_b){

maxseqvaluableTokens -= 1 ;

littleword = maxSequence.replace(aword,"");

}else {

maxseq2valuableTokens -= 1 ;

String temp = maxSequence2;

if (maxSequence.contains(temp.replace(bword, ""))){

littleword = maxSequence2;

}

else littleword = maxSequence2.replace(bword,"");

}

if (maxseqvaluableTokens < maxseq2valuableTokens){

maxSequence = maxSequence2;

maxSequence2 = littleword;

}else {

maxSequence2 = littleword;

}

}

maxAndSecondMaxSeq[0] = maxSequence;

maxAndSecondMaxSeq[1] = maxSequence2;

return maxAndSecondMaxSeq ;

}

#p#分页标题#e#

3.5 返回矫正列表

public List<String> proofreadAndSuggest(String sInput){

//List<String> correctTokens = new ArrayList<String>();

List<String> correctedList = new ArrayList<String>();

List<String> crtTempList = new ArrayList<String>();

//TODO

Calendar startProcess = Calendar.getInstance();

char[] str2char = sInput.toCharArray();

String[] sInputResult = new String[str2char.length];//cwp.wordSegmentate(sInput);

for (int t=0;t<str2char.length;t++){

sInputResult[t] = String.valueOf(str2char[t]);

}

//String[] sInputResult = cwp.wordSegmentate(sInput);

//System.out.println(sInputResult);

// 查察本栏目

public static void main(String[] args) {

String argu1 = "movie.txt"; //movies name file

String argu2 = "movie_result.txt"; //words after segmenting name of all movies

SimpleDateFormat sdf=new SimpleDateFormat("HH:mm:ss");

String startInitTime = sdf.format(new java.util.Date());

System.out.println(startInitTime+" ---start initializing work---");

ChineseWordProofread cwp = new ChineseWordProofread(argu1,argu2);

String endInitTime = sdf.format(new java.util.Date());

System.out.println(endInitTime+" ---end initializing work---");

Scanner scanner = new Scanner(System.in);

while(true){

System.out.print("请输入影片名:");

String input = scanner.next();

if (input.equals("EXIT")) break;

cwp.proofreadAndSuggest(input);

}

scanner.close();

}

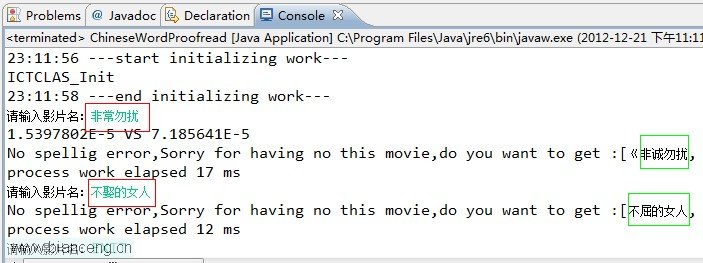

在我的呆板上尝试功效如下:

最后要说的是我用的语料库没有做太多处理惩罚,所以最后出来的有许多正确的功效,好比非诚勿扰会有《非诚勿扰十二月合集》等,这些只要在影片语料库上处理惩罚下即可;

尚有就是该模子不适合大局限在线数据,好比说搜索引擎中的自动校正可能叫智能提示,纵然在影视剧、动漫、综艺等影片的自动检测错误和矫正上本模子尚有许多晋升的处所,若您不惜惜键盘,请敲上你的想法,让我知道,让我们开源、开放、开心,最后源码在github上,可以本身点击ZIP下载后解压,在eclipse中建设工程wordproofread并将解压出来的所有文件copy到该工程下,即可运行。